How AI Actually Works: From QBasic to Neural Networks

Superthread founder David breaks down the history of AI, the battle between symbolic and neural camps, and how modern LLMs are transforming software development.

Mar 30, 2025

David Hasovic

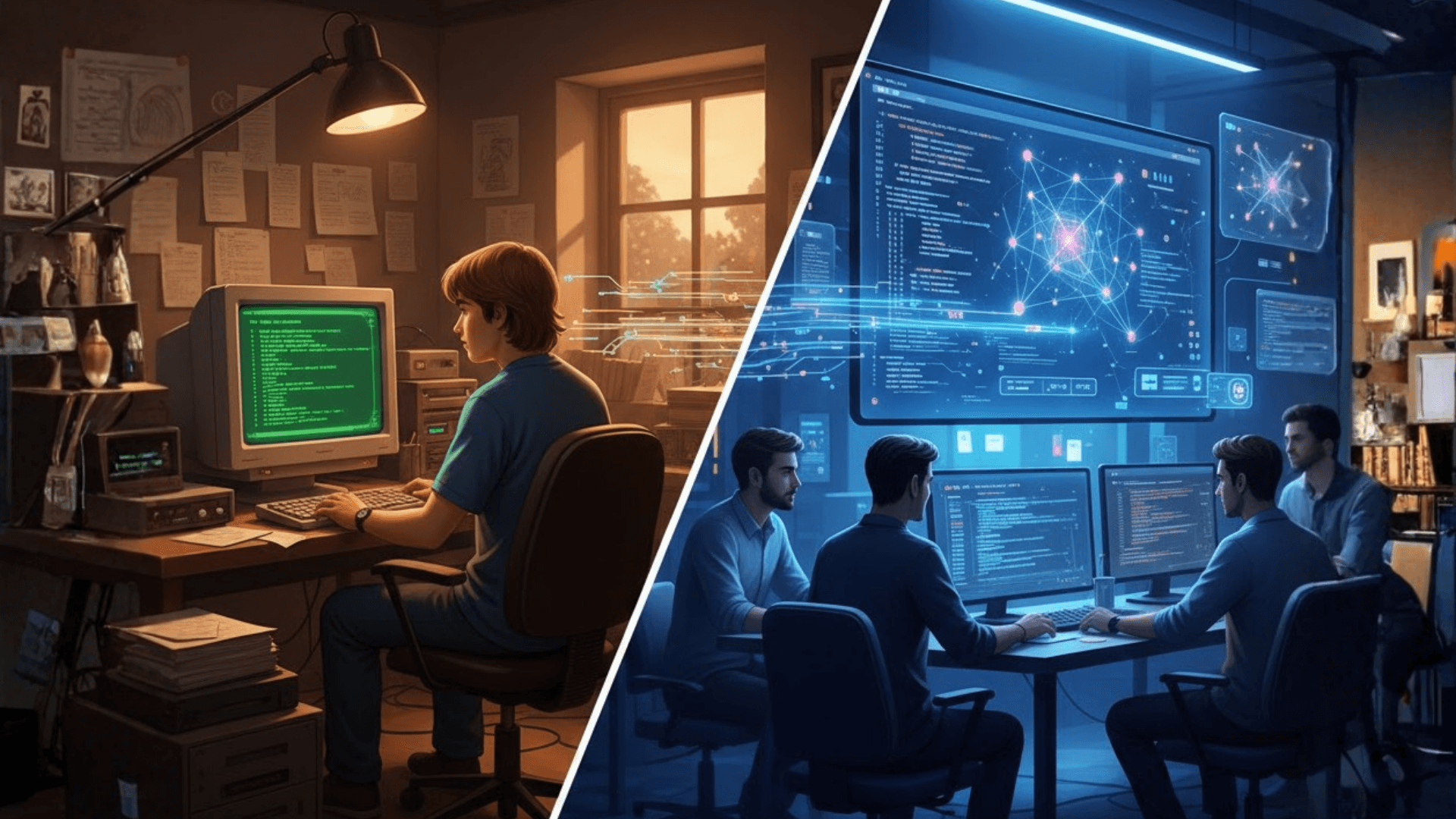

From Coding in QBasic to Building AI-First Software

At Superthread, we are using AI all day, every day. The product has fundamentally morphed into an AI-first tool.

For those who don't know, Superthread is a high-performance issue tracker with built-in documentation. It’s fast, polished, and modern. But the way people work inside these tools hasn't changed for a long time, until now. With the advent of AI, classical software is changing, and we believe Superthread is at the forefront of that innovation.

A Lifetime Obsession with AGI

I’ve been a computer scientist my whole life. I built my first chat program in 1996 as a kid in college using QBasic. I stayed up night after night typing in thousands of phrases, hoping that whatever someone said to it, it would have the right response ready.

I think all computer scientists share this dream of creating Artificial General Intelligence (AGI). Later, at university, I specialized in AI. My teacher actually became a lifelong friend and got me my first job. During that time, my friends and I were building chat programs for competitions like the BBC’s Tomorrow’s World Mega Lab. My best friend’s group came in second with a program that could talk about nothing but Martin Scorsese movies. We’ve been at this for a long time.

The Great AI War: Symbolic vs. Neural Networks

Historically, there have been two main camps in AI:

The Symbolic Camp: Led by the late Marvin Minsky, one of the smartest people to ever live. Its proponents believed AI should be built on logic, symbols, and language.

The Artificial Neural Networks Camp: Led by Geoffrey Hinton. This approach attempts to copy the way neurons work in the human brain.

We are all living in Geoffrey Hinton’s world now. The symbolic camp is mostly dormant. In 2024, Geoffrey Hinton shared the Nobel Prize in Physics with John Hopfield for foundational work on neural networks, and Demis Hassabis (CEO of Google DeepMind) shared the Nobel Prize in Chemistry for protein structure prediction with AlphaFold. The 'connectionist' approach, as the neural network camp is known, has effectively won.

How ChatGPT and LLMs Actually Work

The field hasn’t fundamentally moved forward through new logic; it’s moved forward by feeding massive amounts of data into models using the 'Transformer' architecture (from the 2017 paper Attention Is All You Need).
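For a sense of what that paper's title refers to, here is a minimal sketch of scaled dot-product attention, the core operation it describes. Each query scores every key, a softmax turns the scores into weights, and the output is the weighted average of the values. This is plain Python for readability and the toy inputs are made up. Real models run the same arithmetic as large batched matrix multiplications on GPUs, with learned projections and multiple heads that are left out here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly does this query match each key?
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs


if __name__ == "__main__":
    # Toy example (illustrative numbers only): the query lines up with the
    # first key, so the output leans toward the first value vector.
    toy_queries = [[1.0, 0.0]]
    toy_keys = [[1.0, 0.0], [0.0, 1.0]]
    toy_values = [[10.0, 0.0], [0.0, 10.0]]
    print(attention(toy_queries, toy_keys, toy_values))
```

The point to take away is that nothing here is hand-written logic about language. The model only learns which vectors should attend to which.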

Here is the simple breakdown of how these systems function:

The Brain Analogy: Our brains have neurons connected by synapses. Artificial neural networks have layers: an input layer, an output layer, and many 'hidden' layers in between.

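Weights and Training: Each connection has a 'weight' that sets how strongly one neuron influences the next. Training involves using massive GPU power to nudge these weights, a little at a time, until the network’s outputs match the expected answers in the training data closely enough.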

Data Quality: You need high-quality data. Companies like DeepSeek have recently made waves by using 'distillation': training a model on the outputs of a stronger model, reportedly by having their AI learn from OpenAI’s models, rather than cleaning raw data themselves. It’s cheaper, but the legality of this, like that of training on copyrighted material, is still a major debate.
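The layers, weights, and training described above can be sketched in a few dozen lines. This is a toy, not how production LLMs are built. The network size, learning rate, sigmoid activation, squared-error loss, and XOR task are all illustrative assumptions, and it runs in plain Python rather than on GPUs. What it does show is the basic loop: run the inputs forward through the layers, measure the error, and nudge every weight in the direction that reduces it.

```python
import math
import random

# Toy training data (assumed for illustration): the XOR function.
XOR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Input layer -> one hidden layer -> a single output neuron.

    Each weight list ends with a bias term.
    """
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(ws[:-1], x)) + ws[-1])
        for ws in w_hidden
    ]
    output = sigmoid(sum(w * h for w, h in zip(w_out[:-1], hidden)) + w_out[-1])
    return hidden, output


def mean_squared_error(data, w_hidden, w_out):
    return sum((forward(x, w_hidden, w_out)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, n_hidden=3, epochs=3000, lr=0.5, seed=0):
    """Adjust the weights by gradient descent and record the loss per epoch."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    losses = [mean_squared_error(data, w_hidden, w_out)]  # loss before training

    for _ in range(epochs):
        for x, y in data:
            hidden, out = forward(x, w_hidden, w_out)
            # Backpropagation: error signal at the output (for half the
            # squared error), then pushed back to each hidden neuron.
            d_out = (out - y) * out * (1 - out)
            d_hidden = [
                d_out * w_out[j] * hidden[j] * (1 - hidden[j])
                for j in range(n_hidden)
            ]
            # Nudge every weight against its gradient.
            for j in range(n_hidden):
                w_out[j] -= lr * d_out * hidden[j]
                for i in range(n_in):
                    w_hidden[j][i] -= lr * d_hidden[j] * x[i]
                w_hidden[j][-1] -= lr * d_hidden[j]
            w_out[-1] -= lr * d_out
        losses.append(mean_squared_error(data, w_hidden, w_out))
    return w_hidden, w_out, losses


if __name__ == "__main__":
    _, _, history = train(XOR_DATA)
    print(f"loss before training: {history[0]:.4f}")
    print(f"loss after training:  {history[-1]:.4f}")
```

Scale the same idea up to billions of weights and trillions of words of training text, and you can see why the GPU bill is the bottleneck.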

AI in Practice: Function Calling and Augmented Development

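At Superthread, we use function calling. We describe a set of functions to ChatGPT, and instead of replying with plain text, the model can respond with a structured request to call one of them, which our code then executes through a programming interface. This allows it to perform actual tasks, even for endpoints that don’t exist in our software yet. It’s incredibly powerful, though you have to watch out for hallucinations, such as calls to functions or arguments that were never defined.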
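To make that concrete, here is a rough sketch of the receiving side of function calling. It is not Superthread's actual code and it does not use any vendor SDK. The `create_card` function, the `TOOLS` registry, the `dispatch` helper, and the `{"name": ..., "arguments": ...}` JSON shape are all hypothetical, loosely modeled on how function-calling APIs hand back a call. The part worth noticing is the validation, which is one way to guard against hallucinated function names and arguments.

```python
import json


def create_card(title: str, assignee: str = "unassigned") -> dict:
    """Hypothetical action the model is allowed to trigger."""
    return {"status": "created", "title": title, "assignee": assignee}


# Hypothetical registry: what the model may call, and with which arguments.
TOOLS = {
    "create_card": {
        "handler": create_card,
        "required": {"title"},
        "allowed": {"title", "assignee"},
    },
}


def dispatch(raw_call: str) -> dict:
    """Validate a model-produced call before running anything."""
    try:
        call = json.loads(raw_call)
    except json.JSONDecodeError:
        return {"error": "model output was not valid JSON"}
    if not isinstance(call, dict):
        return {"error": "model output was not a JSON object"}

    name = call.get("name")
    args = call.get("arguments", {})
    tool = TOOLS.get(name) if isinstance(name, str) else None
    if tool is None:
        # The model asked for a function we never offered.
        return {"error": f"unknown function: {name!r}"}
    if not isinstance(args, dict):
        return {"error": "arguments must be a JSON object"}

    missing = tool["required"] - set(args)
    unexpected = set(args) - tool["allowed"]
    if missing or unexpected:
        return {
            "error": "bad arguments",
            "missing": sorted(missing),
            "unexpected": sorted(unexpected),
        }
    return tool["handler"](**args)


if __name__ == "__main__":
    # A well-formed call, as a model might return it.
    print(dispatch('{"name": "create_card", "arguments": {"title": "Fix login bug"}}'))
    # A hallucinated function name is rejected instead of executed.
    print(dispatch('{"name": "delete_workspace", "arguments": {}}'))
```

Returning the error to the model, rather than raising, gives it a chance to correct itself on the next turn.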

For my own programming, I use Claude 3.7 Sonnet inside Cursor. In my experience, the more you use it, the better it gets, as it picks up on your codebase and on the code you accept or reject. We are seeing a massive augmentation of what a single developer can achieve.

Ray Kurzweil predicted that machines would match human-level intelligence by 2029, with the singularity, the point where machines far surpass humans, following by 2045. Given how fast things have been moving since ChatGPT, that timeline is looking more and more realistic.

Experience the future of AI-powered project management.

Don't get left behind by legacy tools. Join the teams building faster with Superthread.